Read this article in Ukrainian here.

Since the beginning of the full-scale war, the Detector Media team has exposed and reported on more than two thousand Russian propaganda fakes. They range from outrageous claims, such as the supposed canonization of US President Joe Biden in Ukraine, to manipulative messages, such as the false accusation that Ukraine is dragging Moldova into the conflict.

This article outlines the tools the Detector Media team uses to counter enemy propaganda: exposing photo and video manipulations, tracking bot networks on social media, and finding credible sources of information about Ukraine and the wider world.

Every day, Russia fabricates or manipulates information to justify its military aggression and human rights violations, above all in the eyes of its own supporters. Its disinformation campaign also aims to persuade those who do not believe its blatant lies that resisting Russia is futile and that Russia's opponents are, in fact, evil. To this end, Russian propagandists have developed elaborate sets of messages and narratives, which they supplement from time to time with smaller falsehoods and manipulations. To discredit their opponents, they also use a range of techniques, including Photoshop edits, deepfakes, and photos and videos taken out of context.

Technology, however, serves not only propagandists but also those who fight disinformation. The following examples show how these tools work in practice.

The Virgin Mary with the head of Stepan Bandera. Tools for checking images and videos

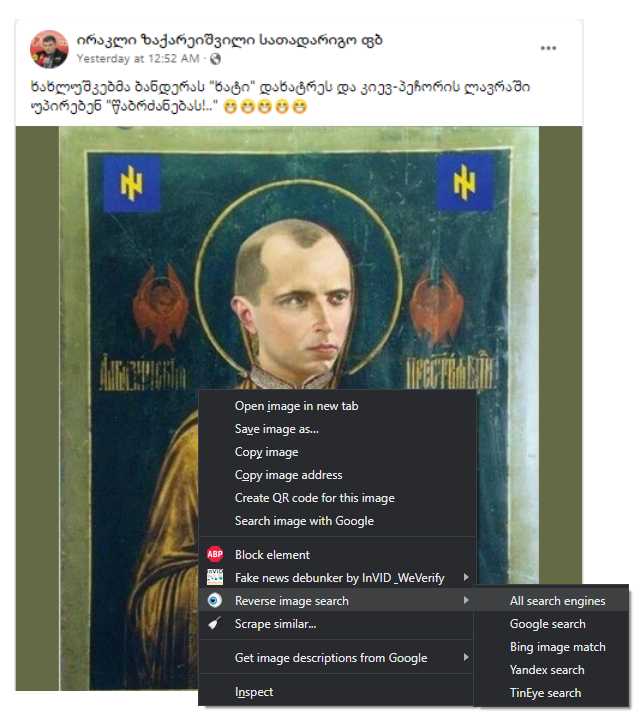

In late January and early February 2023, a fake story spread on Georgian social media claiming that an icon of the Ukrainian nationalist leader Stepan Bandera, bearing the symbols of the Azov regiment (banned in Russia) and the Ukrainian coat of arms, was about to be sent to the Kyiv Pechersk Lavra. The story came with supposed photographic evidence. The fake was quickly debunked with free browser extensions such as TinEye or the InVid Verification Plugin, which take just two clicks to expose this kind of falsehood.

TinEye and the InVid Verification Plugin are intuitive and easy to use. Right-click the image you want to verify, select TinEye or InVid, and choose the search engine or engines to query for similar images. One or more tabs will open, and in most cases they will show the original images used to create the photo you are checking.
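Why can a reverse image search find the original even after someone has pasted a new head onto it? The sketch below does not reproduce how TinEye or the search engines queried by InVid actually match images; it illustrates average hashing (aHash), one simple and widely described technique for spotting near-duplicates. It assumes each image has already been downscaled to an 8×8 grid of grayscale values (0 to 255), and the function names are hypothetical.

```python
from typing import List

Grid = List[List[int]]  # assumed input: 8x8 grayscale pixels, values 0-255


def average_hash(grid: Grid) -> int:
    """Return a 64-bit hash: each bit is 1 where the pixel is brighter than the mean."""
    pixels = [p for row in grid for p in row]
    mean = sum(pixels) / len(pixels)
    bits = 0
    for p in pixels:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming_distance(a: int, b: int) -> int:
    """Count the bits that differ between two hashes."""
    return bin(a ^ b).count("1")


def likely_same_source(grid_a: Grid, grid_b: Grid, threshold: int = 10) -> bool:
    """Treat two images as near-duplicates if their hashes differ in few bits."""
    return hamming_distance(average_hash(grid_a), average_hash(grid_b)) <= threshold


# Usage: a gradient "original", a copy with a small patch pasted over it,
# and an unrelated (inverted) image
original = [[(r * 8 + c) * 4 for c in range(8)] for r in range(8)]
edited = [row[:] for row in original]
for r in range(2):  # simulate a small pasted-in patch in the top-left corner
    for c in range(2):
        edited[r][c] = 255
unrelated = [[255 - v for v in row] for row in original]

print(likely_same_source(original, edited))     # True
print(likely_same_source(original, unrelated))  # False
```

A local edit changes only a few bits of the hash, so the doctored image stays "close" to its source; real services use far more robust methods, and the threshold of 10 bits here is an arbitrary illustrative choice.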

In the case of the supposed Bandera icon, the search revealed that his head had been superimposed on the Albazinskaya icon of the Mother of God, which is kept in the Russian city of Blagoveshchensk.

The InVid Verification Plugin also includes a Forensic tool that uses advanced artificial intelligence models to detect areas of an image that have been altered in photo editors. It works particularly well with images found on social media or saved on your own computer.

Videos are verified in much the same way as photos: select keyframes, take screenshots, and look them up with image search tools. Amnesty International has tried to streamline the verification of YouTube videos through its Citizenevidence website, but content from other social networks still has to be checked manually. When verifying photos and videos from a specific location, however, it is not always necessary to hunt for the originals. Often it is enough to compare the footage with the same area on online maps, in search engine images, or through social media geolocation features.
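Taking screenshots by hand works, but still frames can also be pulled out of a video at regular intervals with the open-source ffmpeg tool and then fed into a reverse image search. A minimal sketch, assuming ffmpeg is installed and on the PATH; the file names and the five-second interval are hypothetical choices, and these are evenly spaced frames rather than true scene-change keyframes.

```python
import subprocess
from typing import List


def frame_commands(video: str, duration_s: int, every_s: int = 5) -> List[List[str]]:
    """Build one ffmpeg command per timestamp; each saves a single PNG frame."""
    commands = []
    for t in range(0, duration_s, every_s):
        commands.append([
            "ffmpeg", "-y",       # overwrite existing output files
            "-ss", str(t),        # seek to t seconds before decoding
            "-i", video,
            "-frames:v", "1",     # write exactly one video frame
            f"frame_{t:04d}.png",
        ])
    return commands


def extract_frames(video: str, duration_s: int, every_s: int = 5) -> None:
    """Run the commands one by one; raises if ffmpeg reports an error."""
    for cmd in frame_commands(video, duration_s, every_s):
        subprocess.run(cmd, check=True)


# Usage (hypothetical file name):
# extract_frames("suspect_clip.mp4", duration_s=60)
```

Building the argument lists separately from running them makes it easy to inspect or adjust the commands before touching any files.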

Russian propaganda outlets sometimes report that a Ukrainian politician was publicly ridiculed on a popular foreign TV program or featured on the front page of a foreign publication. Such claims can be checked quickly by looking for the program or publication on its own website or through a search engine.

How to check social media users

During US President Joe Biden's visit to Kyiv in February 2023, social media posts began circulating that claimed the air raid sirens had been switched on specifically to scare him on his arrival. Many of these posts used identical text decrying the supposed cynicism of the Ukrainian and US authorities. The Russian propaganda outlet RT then reposted the fake on its Telegram channel.

On first reading, it is often hard to tell whether a message is being spread deliberately as disinformation. Caution is warranted when the same message is repeated by many social media users. The reach of a campaign such as the one around the Biden message can be gauged with advanced Google searches that use operators for exact phrases and publication dates. Similar advanced searches work on Facebook and Twitter. Third-party tools such as whopostedwhat.com for Facebook or exportcomments.com for Twitter can also help establish the scale of a particular campaign.
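An advanced Google search of this kind combines an exact-phrase operator (quotation marks) with Google's `after:` and `before:` date operators. The sketch below only builds such a search URL; the example phrase and dates are hypothetical, and Google may change how it interprets these operators at any time.

```python
from datetime import date
from urllib.parse import urlencode


def google_search_url(phrase: str, after: date, before: date, site: str = "") -> str:
    """Build a Google search URL for an exact phrase within a date range."""
    parts = [f'"{phrase}"', f"after:{after.isoformat()}", f"before:{before.isoformat()}"]
    if site:
        parts.append(f"site:{site}")  # optionally restrict results to one domain
    # urlencode percent-encodes quotes and colons and turns spaces into '+'
    return "https://www.google.com/search?" + urlencode({"q": " ".join(parts)})


# Hypothetical phrase and dates around the event being checked
url = google_search_url("sirens to scare Biden", date(2023, 2, 19), date(2023, 2, 23))
print(url)
# https://www.google.com/search?q=%22sirens+to+scare+Biden%22+after%3A2023-02-19+before%3A2023-02-23
```

Opening the resulting URL lists pages containing the exact wording within the chosen window, which gives a rough sense of how widely and how quickly the text spread.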

For Telegram, additional tools such as intelx.io can help check whether a message is being distributed in a coordinated way. The service also offers some advanced search for Facebook and Twitter, which makes it useful for tracking disinformation campaigns across platforms.

Keep in mind, though, that a post shared by many users at the same time does not necessarily mean those users are colluding: many people may like and share a post independently. A more reliable approach is to check whether particular accounts are bots that regularly share the same messages or post similar comments.
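Looking for accounts that regularly share the same messages can be roughed out in a few lines once posts have been exported (for example, with one of the tools mentioned above). This is a minimal sketch, not Detector Media's method: it assumes each post is an `(account, text)` pair, treats texts as identical after normalizing case, punctuation and whitespace, and uses hypothetical thresholds and sample posts. A single copied text is not enough to flag an account; it must repeat several copied texts.

```python
import re
from collections import defaultdict
from typing import Dict, Iterable, Set, Tuple


def normalize(text: str) -> str:
    """Lowercase, drop punctuation (Unicode-aware, so Cyrillic survives), collapse whitespace."""
    text = re.sub(r"[^\w\s]", "", text.lower())
    return " ".join(text.split())


def suspicious_accounts(posts: Iterable[Tuple[str, str]],
                        min_accounts: int = 3,
                        min_shared: int = 2) -> Dict[str, int]:
    """Return accounts that posted at least `min_shared` distinct texts,
    each of which was also posted by at least `min_accounts` accounts."""
    authors: Dict[str, Set[str]] = defaultdict(set)
    for account, text in posts:
        authors[normalize(text)].add(account)

    # Keep only texts that were copied across many accounts
    copied = {t: accs for t, accs in authors.items() if len(accs) >= min_accounts}

    # Count how many copied texts each account took part in
    counts: Dict[str, int] = defaultdict(int)
    for accs in copied.values():
        for account in accs:
            counts[account] += 1
    return {a: n for a, n in counts.items() if n >= min_shared}


# Hypothetical sample posts
posts = [
    ("acc1", "Sirens were switched on just to scare Biden!"),
    ("acc2", "sirens were switched on just to scare biden"),
    ("acc3", "Sirens were switched on  just to scare Biden."),
    ("acc1", "Such cynicism from Kyiv and Washington"),
    ("acc2", "Such cynicism from Kyiv and Washington!"),
    ("acc3", "Such cynicism from Kyiv and Washington"),
    ("user9", "Sirens were switched on just to scare Biden"),
    ("user9", "Welcome to Kyiv, Mr President"),
]
print(sorted(suspicious_accounts(posts)))  # ['acc1', 'acc2', 'acc3']
```

Here `user9` shared one widely copied text but is not flagged, which mirrors the caution above: only repeated copying across several messages marks an account for a closer look.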

To detect bots on Twitter, you can use the Botometer website, which estimates the likelihood that an account is operated by an automated program. Simply enter the account's username in the search field. Botometer also checks a suspicious account's followers and friends for bot-like behavior, giving a fuller picture of potentially problematic accounts.

On Telegram, coordinated disinformation efforts can often be identified by similar names, usernames, or logos across networks of channels. The bots that coordinate posting for such channel networks can also point to a possible disinformation campaign.
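Similarity of channel names and usernames can be screened automatically before a closer manual look. A minimal sketch using `SequenceMatcher` from Python's standard `difflib` module; the channel names and the 0.8 threshold are hypothetical, and a high score only flags a pair for review, it does not prove the channels are linked.

```python
from difflib import SequenceMatcher
from itertools import combinations
from typing import List, Tuple


def similar_pairs(names: List[str], threshold: float = 0.8) -> List[Tuple[str, str, float]]:
    """Return name pairs whose similarity ratio (0 to 1) is at least `threshold`, highest first."""
    pairs = []
    for a, b in combinations(names, 2):
        ratio = SequenceMatcher(None, a.lower(), b.lower()).ratio()
        if ratio >= threshold:
            pairs.append((a, b, round(ratio, 2)))
    return sorted(pairs, key=lambda p: p[2], reverse=True)


# Hypothetical channel usernames
channels = ["example_news", "example_news24", "other_feed", "weather_demo"]
for a, b, score in similar_pairs(channels):
    print(a, b, score)  # example_news example_news24 0.92
```

Comparing every pair grows quadratically with the number of channels, which is fine for a few hundred names; logos would need a separate image comparison.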

If needed, other tools for various social networks can be found as well. These platforms are frequently updated and modified, so new ways of exposing disinformation keep appearing and are worth looking out for.

Does Zaluzhnyi’s daughter have a villa abroad? Tools for working with statistical and open data

As the first anniversary of the full-scale war approached, Kremlin media outlets reported that the daughter of Valeriy Zaluzhnyi, Commander-in-Chief of the Armed Forces of Ukraine, owned an estate on an island in Chile. VoxUkraine's fact-checking team refuted this claim in two ways.

First, the team used the FotoForensics tool to examine the documents presented as evidence of the daughter's ownership and found that the text had likely been manipulated. The team then checked the document's registration number on the website of the Chilean real estate registration company that supposedly issued the certificate and found that the number was invalid.

Screenshot of property search by identification code. Source: VoxUkraine

Had VoxUkraine relied solely on the verification tool, its refutation would have been less convincing. Open data registries, statistical portals, budget reports, opinion poll results, and company records make it possible to scrutinize, confirm, or debunk a vast amount of information, and this is what helps prevent many manipulations.

The main limitation of open data is that it may not be updated often enough for every purpose. Some resources also charge for access, and data may be published in foreign languages or in formats that are inconvenient to process. These obstacles can usually be overcome through further online research, consultation with experts, installing additional software, and similar solutions.
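For the format problem in particular, a few lines of code often suffice. A minimal sketch, assuming a CSV export that uses semicolons as separators, decimal commas, and the legacy Windows-1251 (cp1251) Cyrillic encoding found in some older exports; the sample columns are hypothetical, and the actual encoding and delimiter should be checked for each dataset.

```python
import csv
import io
from typing import Dict, List


def load_csv_export(raw: bytes, encoding: str = "cp1251", delimiter: str = ";") -> List[Dict[str, str]]:
    """Decode raw CSV bytes and return rows as dicts keyed by the header row."""
    text = raw.decode(encoding)
    return list(csv.DictReader(io.StringIO(text), delimiter=delimiter))


def to_number(value: str) -> float:
    """Convert a decimal-comma string such as '1 200,50' to a float
    (removes ordinary spaces used as thousands separators)."""
    return float(value.replace(" ", "").replace(",", "."))


# Hypothetical sample: one budget line ("name;amount") encoded in cp1251
sample = "назва;сума\nШкола №1;1 200,50\n".encode("cp1251")
rows = load_csv_export(sample)
print(rows[0]["назва"], to_number(rows[0]["сума"]))  # Школа №1 1200.5
```

Decoding with the wrong encoding typically produces unreadable characters or an error, so trying `utf-8` first and falling back to `cp1251` is a common practical check.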

You always have to think. Instead of a conclusion

Many of the falsifications uncovered by Detector Media and other fact-checking groups are not simple fabrications that can be checked against open sources or with specialized tools. Russian propaganda often relies on manipulations and messages that are hard to refute or unpack. That is why it is essential to stay vigilant, know the full range of Russian propaganda tactics, and follow the work of fellow fact-checkers and investigative journalists closely.

Beyond conventional fact-checking, the Detector Media team works with large volumes of social media data, which we collect using artificial intelligence tools. These tools let us gather tens of thousands of posts, which we then analyze to identify pro-Russian disinformation messages. More details on the team's data collection and analysis methodology can be found here.